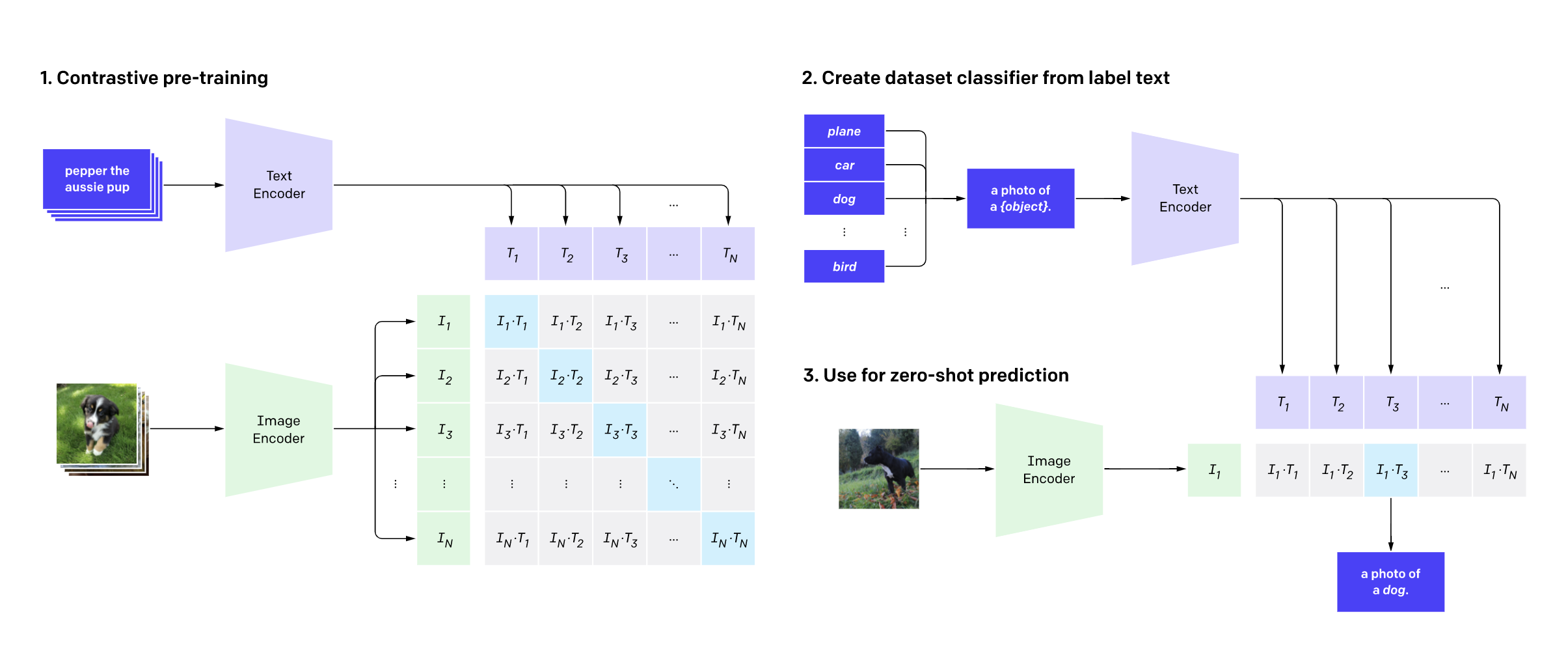

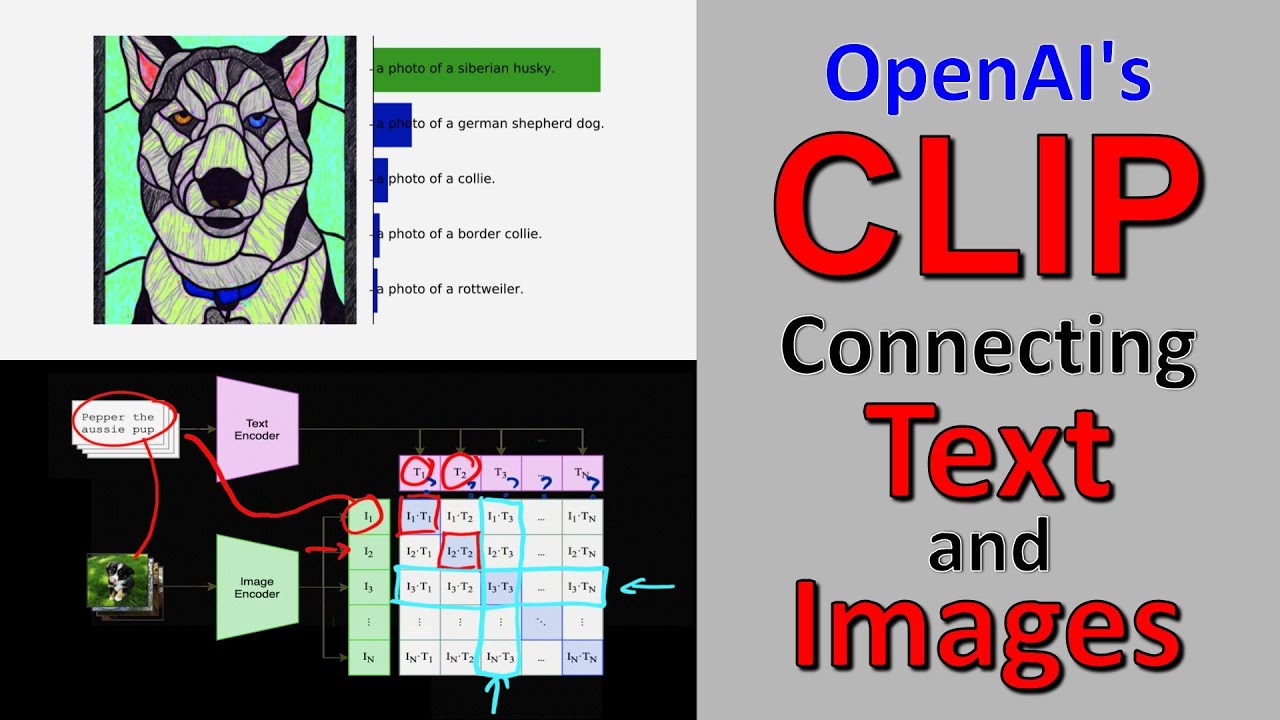

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning - YouTube

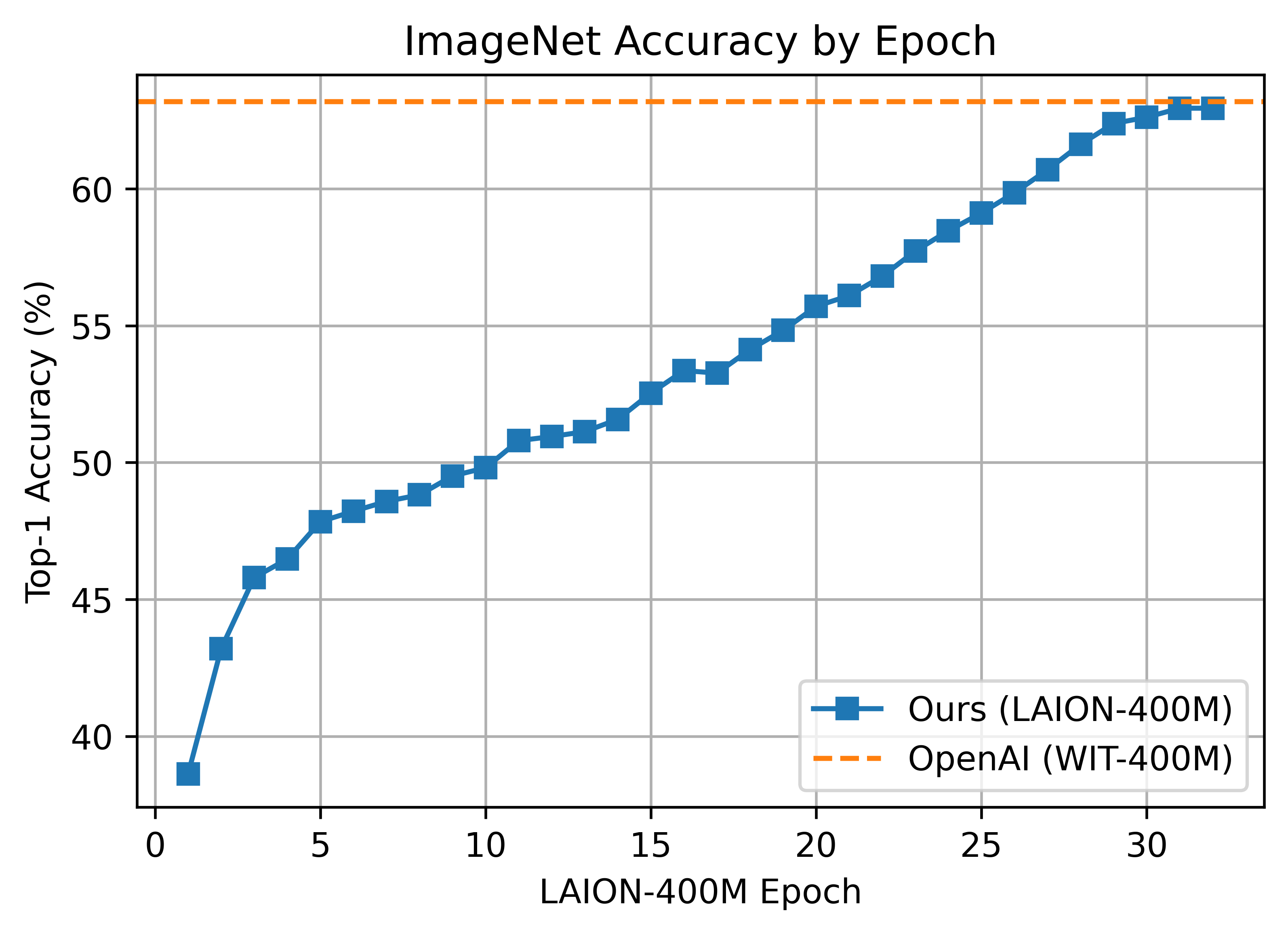

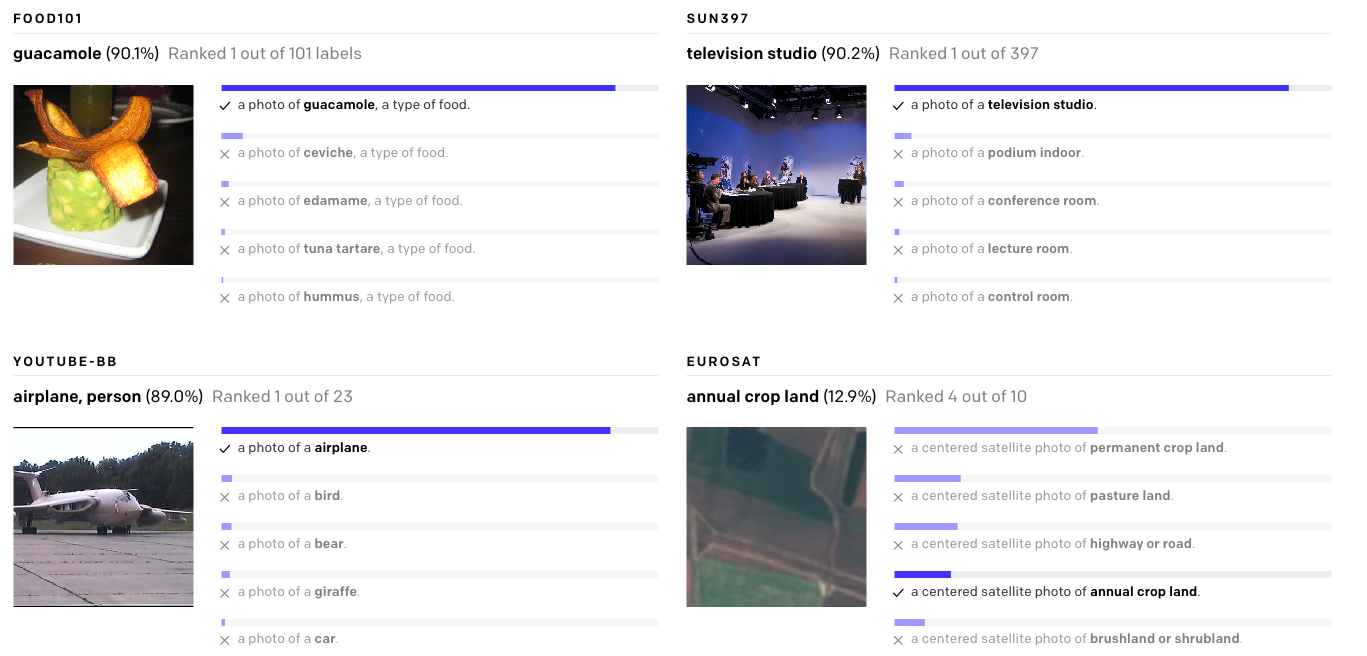

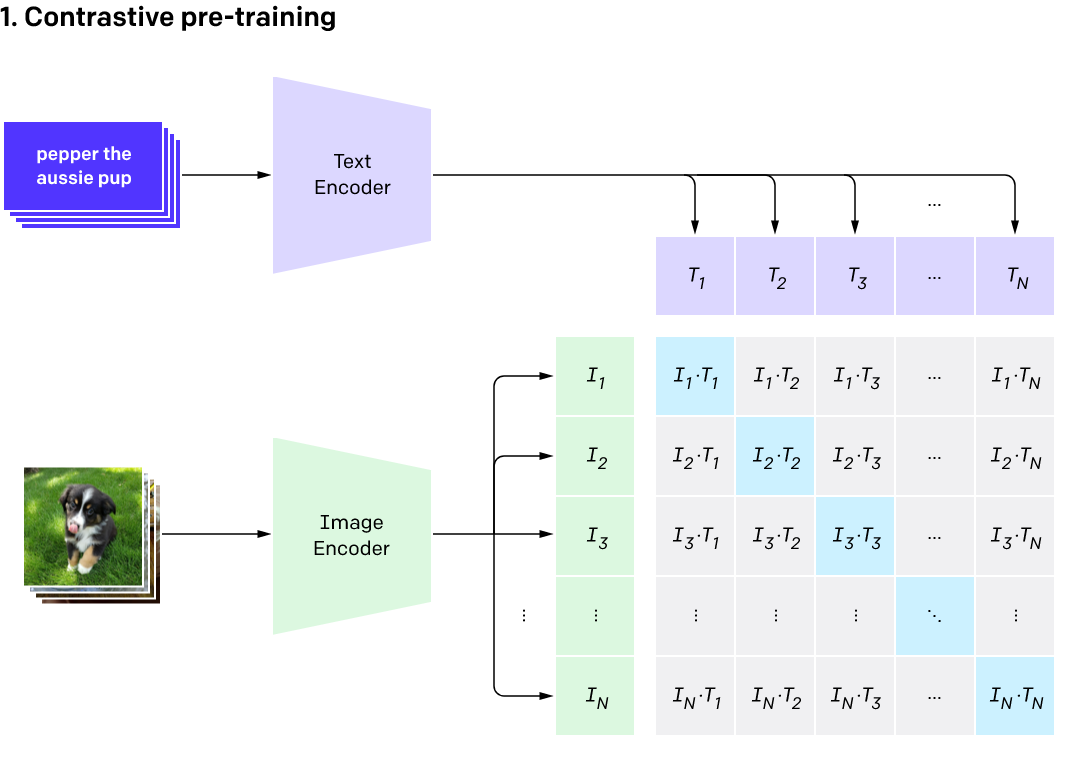

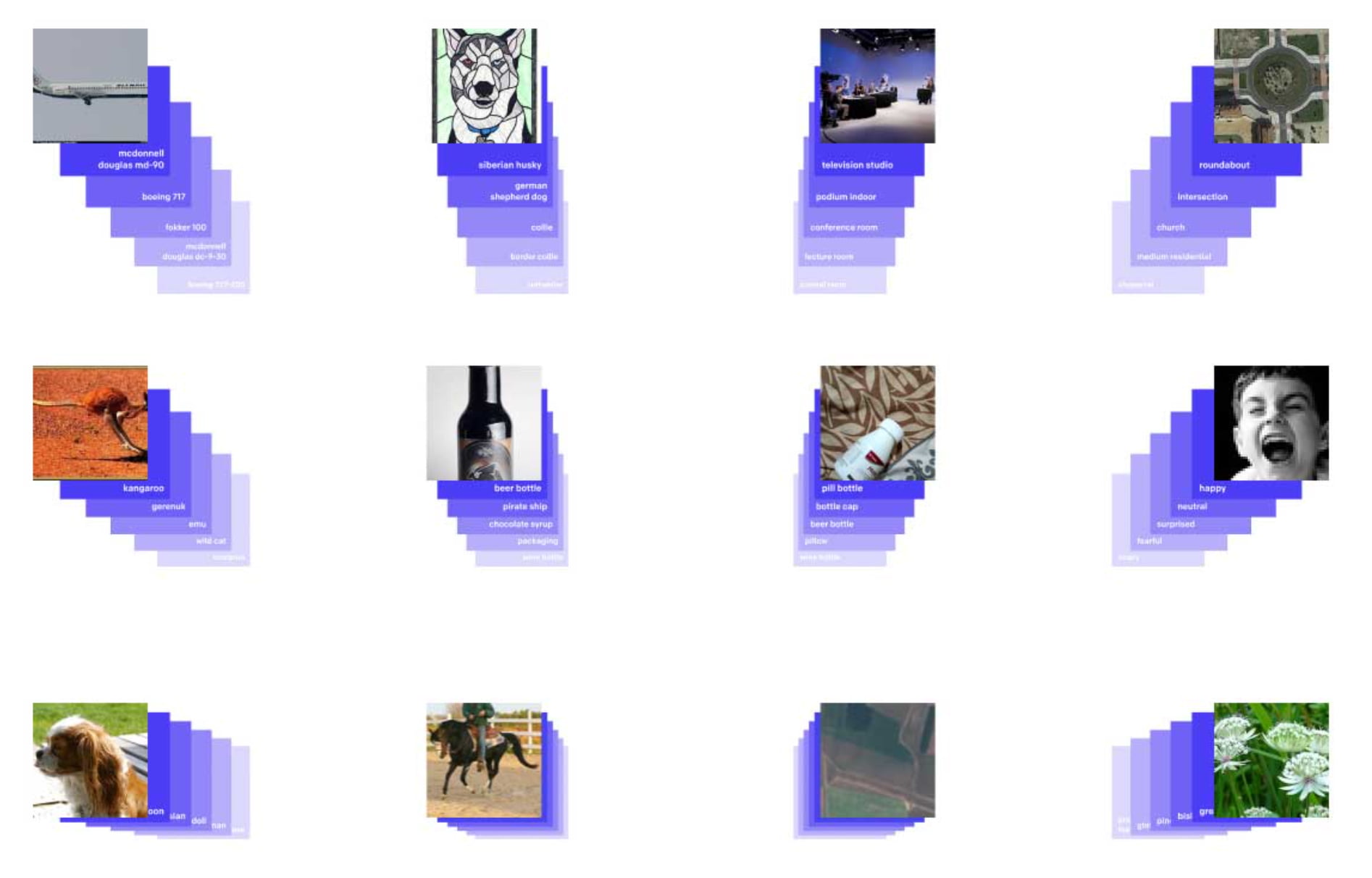

Using CLIP to Classify Images without any Labels | by Cameron R. Wolfe, Ph.D. | Towards Data Science

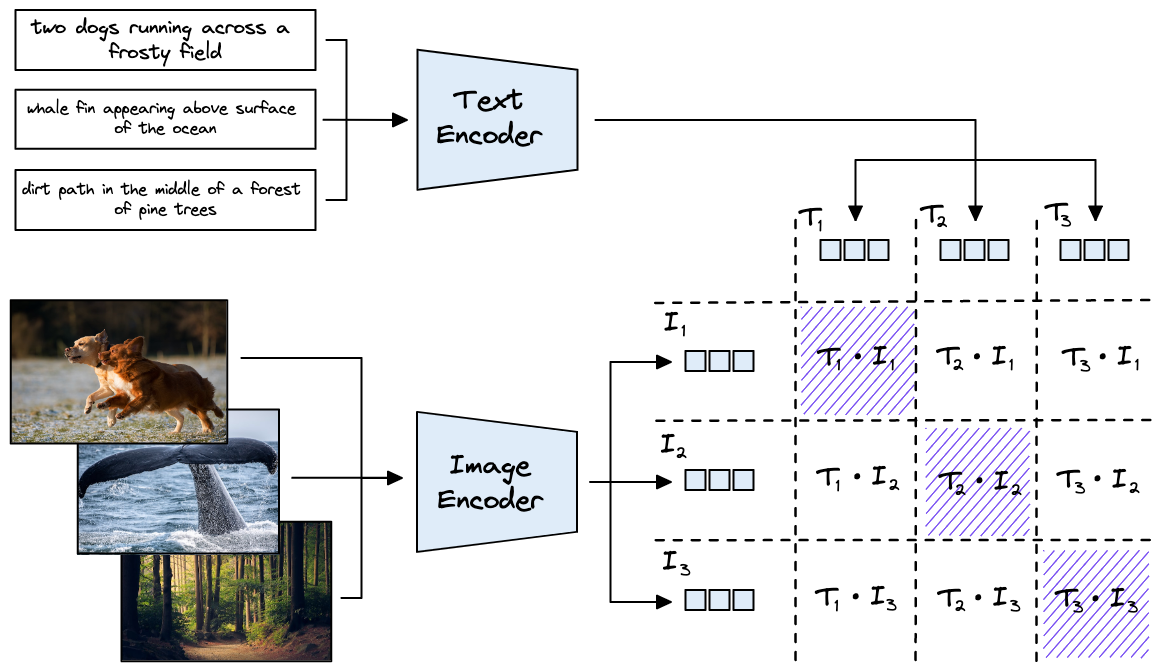

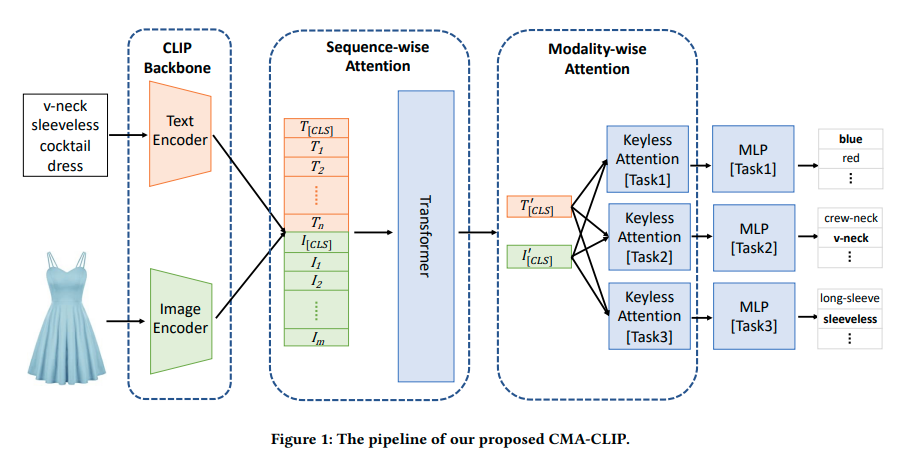

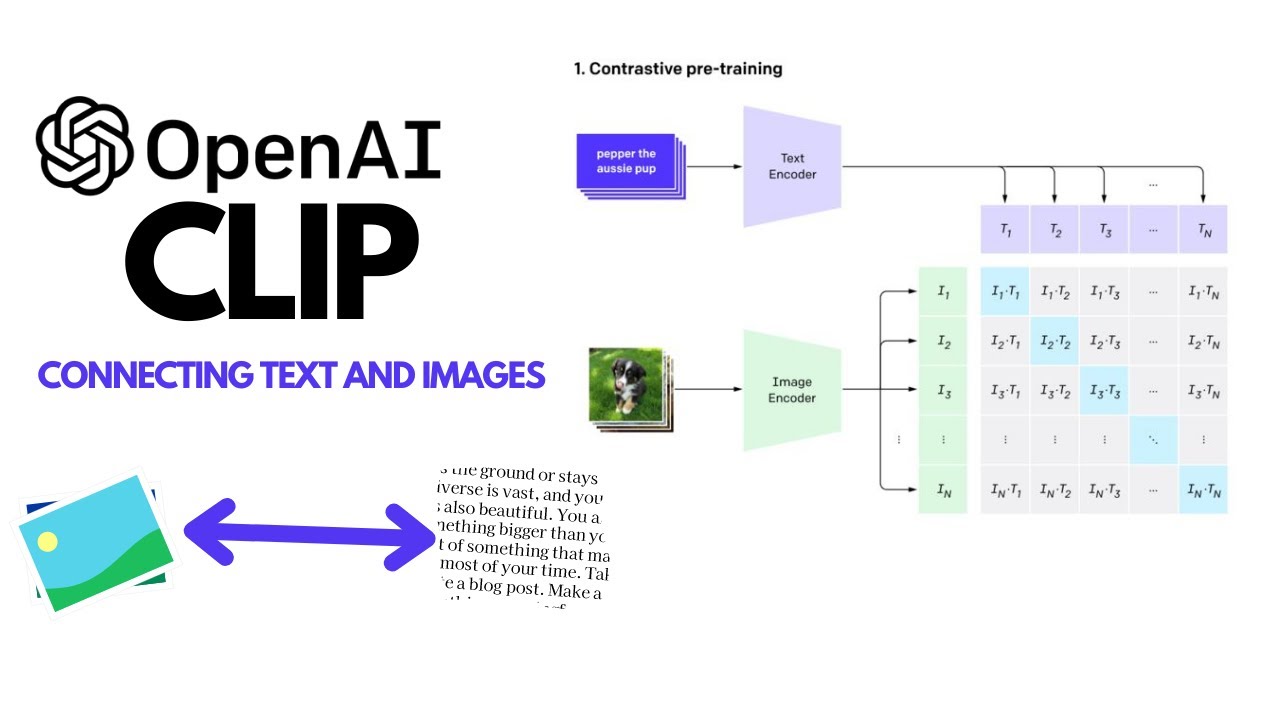

Process diagram of the CLIP model for our task. This figure is created... | Download Scientific Diagram

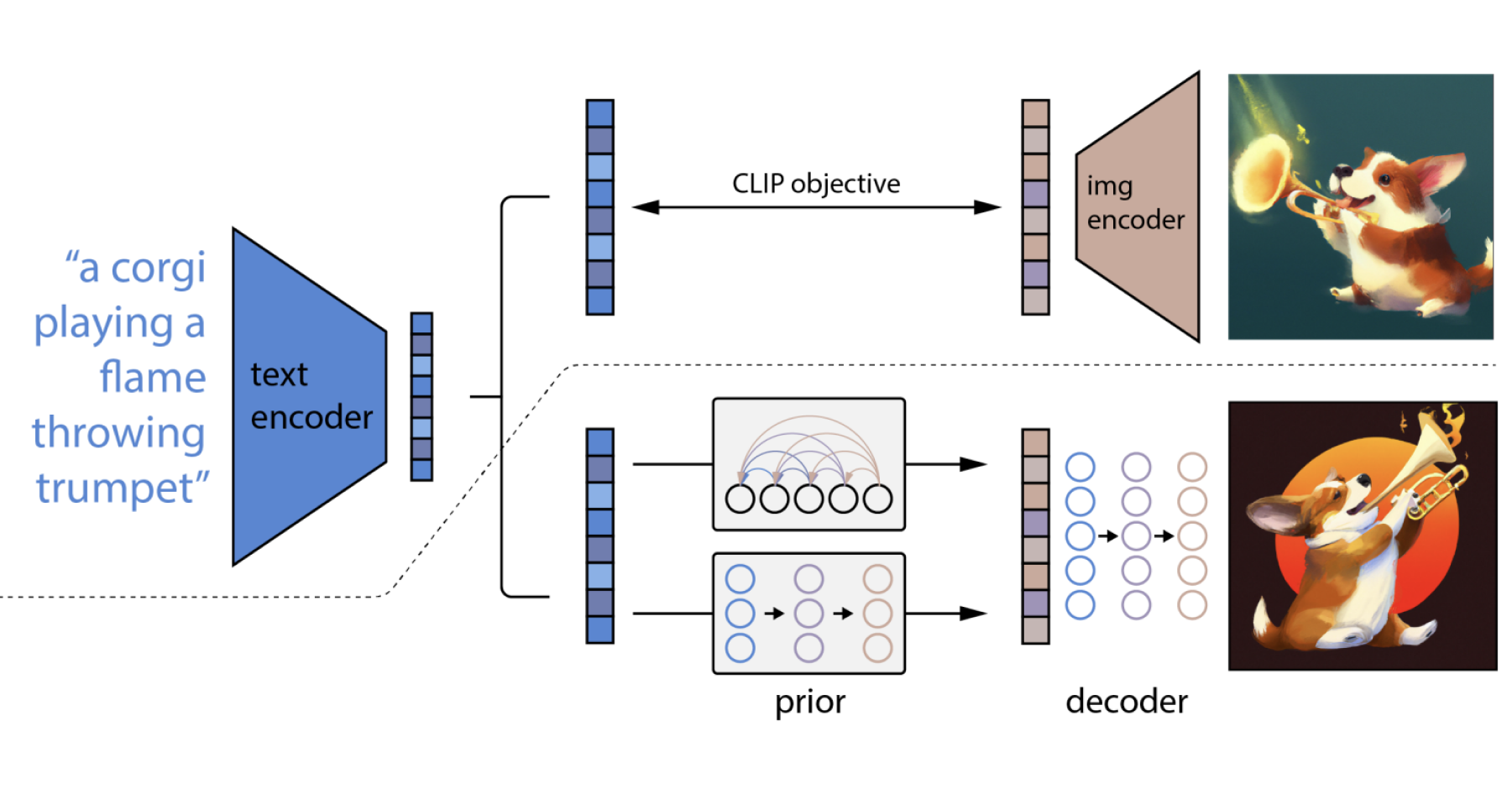

From DALL·E to Stable Diffusion: How Do Text-to-Image Generation Models Work? - Edge AI and Vision Alliance

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image

Implement unified text and image search with a CLIP model using Amazon SageMaker and Amazon OpenSearch Service | AWS Machine Learning Blog